- 14 July 2020

- C#, Architecture, Signals Network, K8s

Designing a scalable and secure trading platform on top of Kubernetes

From the first moment I began working as lead software architect at Signals Network, I was extremely excited about the software we had planned to build: an online platform where users can program and run their trading strategies using just their browsers. At the same time, I knew I would have to deal with quite specific requirements for the component responsible for executing user-coded strategies.

The component had to meet the following criteria:

- Automated strategy deployment

Execution of a strategy coded in the web editor must be a fully automated process, without any manual steps or configuration.

- Parallel execution

All deployed strategies must subscribe to market data events (new trade, new price, etc.) and process them in real time. This must happen for all strategies at once, as soon as the event is received from the exchange.

- Scalability

The strategy execution component must be horizontally scalable: if there aren't enough resources to run more strategies, we can just add another server and scale out.

- Security

We had to figure out how to execute the strategies while keeping them isolated from the rest of our services and from each other. After all, a trading strategy is a piece of untrusted C# code provided by the end user, which we need to run on our infrastructure. Even if we restricted the set of assemblies available in our online code editor, a strategy implementation could still contain malicious code. We couldn't take the risk that a misbehaving strategy would affect our trading platform.

- Resource limits

There had to be a way to limit the resources a strategy can consume, as we don't have full control over the strategies' code. A buggy or poorly written strategy could otherwise eat all the free RAM or too much CPU and affect other services as well.

What about the Actor Model?

I would guess that anybody with experience building trading, online gaming, social media, or complex event stream processing applications has heard about the Actor Model and the benefits it brings to distributed architectures.

When I joined Signals Network, I already had some experience building a C# application for automated trading on smaller crypto exchanges. In that application, I used the Akka.NET framework, which met the requirements for automated strategy deployment, parallel execution, and scalability. Originally, I aimed to use Akka.NET for the real-time trading functionality in Signals Network as well, but it did not provide any way to limit the resources consumed by individual actors or to isolate the strategy execution process to the level we needed.

Let’s check the Docker containers

Apart from virtual machines, which were inefficient for our use case, the most secure approach I could think of was running each strategy as an isolated .NET Core service in its own Docker container, which can be hardened further with a Linux security module like AppArmor to prevent strategy code from performing malicious actions.

Docker is designed to run multiple containers, so there shouldn't be an issue with executing multiple strategies in parallel. It also provides ways to set resource limits for each container. However, to prove this concept, I needed to implement automated strategy deployment, i.e. find a way to dynamically start a strategy execution container when the user clicks the "deploy strategy" button in the UI. Using just Docker, the solution would almost certainly involve low-level bash scripts, which are often hard to maintain and debug. It would be better to reuse functionality already built into a container orchestration platform.
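For comparison, the C# sketch below shows roughly what a hardened per-strategy container looks like with plain Docker. It only assembles the `docker run` arguments and does not execute anything; the image name, the `strategy-executor` AppArmor profile, and the limit values are hypothetical, and such a profile would have to be loaded on the host beforehand. Actually starting the container would still mean spawning the `docker` CLI as an external process and tracking its lifecycle by hand.

```csharp
using System;
using System.Collections.Generic;

// Builds (but does not run) the `docker run` arguments for one strategy container.
// Image, AppArmor profile and limit values are hypothetical placeholders.
static List<string> BuildDockerRunArgs(string strategyId, string image,
                                       string memoryLimit, string cpuLimit,
                                       string appArmorProfile)
{
    return new List<string>
    {
        "run", "--detach",
        "--name", $"strategy-{strategyId}",
        "--memory", memoryLimit,      // hard RAM cap, e.g. "256m"
        "--cpus", cpuLimit,           // CPU quota in cores, e.g. "0.5"
        "--pids-limit", "100",        // caps the number of processes in the container
        "--read-only",                // read-only root filesystem
        "--security-opt", $"apparmor={appArmorProfile}", // profile must already be loaded on the host
        image,
    };
}

var dockerArgs = BuildDockerRunArgs("42", "registry.example.com/strategy-executor:1.0",
                                    "256m", "0.5", "strategy-executor");
Console.WriteLine("docker " + string.Join(" ", dockerArgs));
```

Every strategy needs its own name, limits, and cleanup, and all of that bookkeeping falls on custom scripts or code when Docker is used on its own.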

Bring Kubernetes to the table

Luckily, by the time I was cracking this problem, Kubernetes had been around for quite a while, and we were already using it to host our microservices. I decided to take a closer look under its hood to check how well it would meet our needs for the strategy execution component.

Kubernetes clusters are horizontally scalable, and I discovered that Kubernetes also lets you set CPU and memory limits for the containers in each pod. Regarding security, besides the fact that each service runs in an isolated Docker container, Pod Security Policies allowed us to define restrictive rules for access to the filesystem, network, system calls, etc. Since pods running different strategies execute concurrently, this setup also meets the parallel execution requirement.
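As an illustration, the sketch below assembles a minimal Pod manifest for one strategy executor as JSON using `System.Text.Json`. `resources.limits` caps CPU and memory for the container, and the `securityContext` fields are the kind of settings a restrictive Pod Security Policy can require. The pod name, label, image, and limit values are hypothetical placeholders, not the platform's actual configuration.

```csharp
using System;
using System.Text.Json;

// Builds a minimal Pod manifest (as JSON) for one strategy executor.
// Names, labels, image and limit values are hypothetical placeholders.
static string BuildExecutorPod(string strategyId, string image, string cpu, string memory)
{
    var pod = new
    {
        apiVersion = "v1",
        kind = "Pod",
        metadata = new { name = $"strategy-{strategyId}", labels = new { app = "strategy-executor" } },
        spec = new
        {
            automountServiceAccountToken = false, // no service account token is mounted into the pod
            containers = new[]
            {
                new
                {
                    name = "executor",
                    image,
                    resources = new
                    {
                        requests = new { cpu, memory }, // what the scheduler reserves on a node
                        limits = new { cpu, memory },   // hard cap enforced on the node
                    },
                    securityContext = new
                    {
                        runAsNonRoot = true,
                        allowPrivilegeEscalation = false,
                        readOnlyRootFilesystem = true,
                        capabilities = new { drop = new[] { "ALL" } },
                    },
                },
            },
        },
    };
    return JsonSerializer.Serialize(pod, new JsonSerializerOptions { WriteIndented = true });
}

Console.WriteLine(BuildExecutorPod("42", "registry.example.com/strategy-executor:1.0", "500m", "256Mi"));
```

With limits like these, a strategy that exceeds its memory limit is OOM-killed, while one that tries to use more CPU than its limit is throttled, so neither can starve the other workloads on the node.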

The last piece of the puzzle was automated strategy deployment. As a programmer, I was already familiar with the Kubernetes command-line tool, kubectl. However, to use it from our .NET Core service, which is subscribed to commands from the web application, I would have had to start external processes from my C# code, which is always somewhat tricky and risky. Fortunately, Kubernetes also has a fully featured REST API, with client libraries for various languages, including C#. With API endpoints to create, update, or delete a deployment, start a pod, or get the status of all containers in the cluster, nothing was blocking me from programming our strategy execution service.
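The service described here used the Kubernetes C# client library. To avoid tying the example to a particular client version, the sketch below goes one level lower and builds the underlying REST call with `HttpClient` types. It targets the standard `apps/v1` Deployments endpoint; the namespace, image, and `strategy-{id}` naming scheme are assumptions, the request is only built and not sent, and authentication (for example, the pod's service account token) and TLS setup are omitted.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Builds (but does not send) the REST request that creates a one-replica Deployment
// for a strategy. Assumes strategyId is already a valid lowercase DNS label fragment.
static HttpRequestMessage BuildCreateDeploymentRequest(Uri apiServer, string ns, string strategyId, string image)
{
    var labels = new { app = "strategy-executor", strategy = strategyId };
    var deployment = new
    {
        apiVersion = "apps/v1",
        kind = "Deployment",
        metadata = new { name = $"strategy-{strategyId}", labels },
        spec = new
        {
            replicas = 1,
            selector = new { matchLabels = labels }, // must match the pod template labels
            template = new
            {
                metadata = new { labels },
                spec = new { containers = new[] { new { name = "executor", image } } },
            },
        },
    };
    var uri = new Uri(apiServer, $"/apis/apps/v1/namespaces/{ns}/deployments");
    return new HttpRequestMessage(HttpMethod.Post, uri)
    {
        Content = new StringContent(JsonSerializer.Serialize(deployment), Encoding.UTF8, "application/json"),
    };
}

var request = BuildCreateDeploymentRequest(new Uri("https://kubernetes.default.svc"), "strategies", "42",
                                           "registry.example.com/strategy-executor:1.0");
Console.WriteLine($"{request.Method} {request.RequestUri}");
```

Using a Deployment rather than a bare pod means Kubernetes itself recreates the executor if its pod is deleted or its node fails.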

Final design

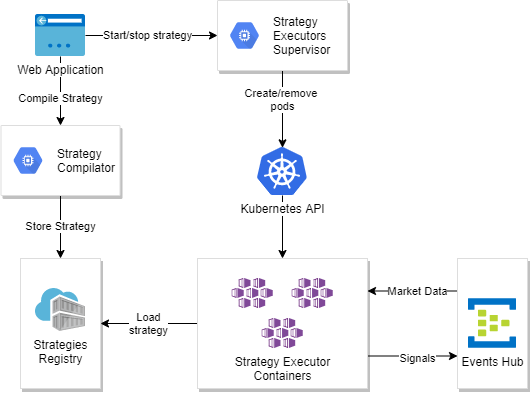

The final architecture of the strategy execution component looks like this:

(infrastructure and implementation details omitted for brevity)

Strategy Executors Supervisor is a .NET Core service responsible for managing Strategy Executor Containers. It uses the Kubernetes C# Client to dynamically start or stop executors according to requests received from the Web Application. Every executor container runs an instance of Strategy Executor Service, which dynamically loads the specified strategy from Strategies Registry, subscribes to market events, and runs the strategy until it is stopped.
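One way a supervisor like this could translate web application commands into cluster operations is sketched below. Each strategy gets exactly one Deployment with a deterministic name, so a "stop" request can locate what "start" created. The command strings, namespace, and naming scheme are hypothetical; the 63-character cap is a conservative choice matching the DNS label length limit Kubernetes applies to many object names.

```csharp
using System;
using System.Linq;
using System.Net.Http;

// Hypothetical naming scheme: one Deployment per strategy, named deterministically.
// Lowercases the id, replaces anything outside [a-z0-9] with '-', caps length at 63.
static string ToDeploymentName(string strategyId)
{
    var chars = strategyId.ToLowerInvariant()
        .Select(c => (c >= 'a' && c <= 'z') || (c >= '0' && c <= '9') ? c : '-')
        .ToArray();
    var name = ("strategy-" + new string(chars)).TrimEnd('-');
    return name.Length <= 63 ? name : name.Substring(0, 63).TrimEnd('-');
}

// Maps a (hypothetical) command from the web application to the REST call the supervisor would issue.
static (HttpMethod Method, string Path) MapCommand(string command, string ns, string strategyId)
{
    var collection = $"/apis/apps/v1/namespaces/{ns}/deployments";
    return command switch
    {
        "start" => (HttpMethod.Post, collection), // body would be the executor Deployment manifest
        "stop" => (HttpMethod.Delete, $"{collection}/{ToDeploymentName(strategyId)}"),
        _ => throw new ArgumentException($"Unknown command '{command}'", nameof(command)),
    };
}

Console.WriteLine(MapCommand("stop", "strategies", "My_Strategy#1"));
```

Keeping the name derivable from the strategy id also lets the supervisor rebuild its view of running strategies from the cluster after a restart, instead of storing that state separately.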

Since we released the demo version of the Signals platform, the implementation of the strategy execution component has improved significantly, but the overall architecture has stayed the same, as it still satisfies all our requirements. We are simply using more Kubernetes features, such as container lifecycle hooks, persistent volumes, liveness/readiness/startup probes, and other mechanisms, to recover easily from failures and keep strategies running at all times.
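As an example of those mechanisms, the fragment below shows probe and lifecycle settings for an executor container, built as JSON from C#. It assumes the executor exposes hypothetical `/healthz/live` and `/healthz/ready` HTTP endpoints on port 8080 and ships a hypothetical `/app/flush-state` script; the timings are illustrative only.

```csharp
using System;
using System.Text.Json;

// Probe and lifecycle settings for the executor container (JSON fragment of a container spec).
// Endpoints, port, script path and timings are hypothetical.
static string BuildExecutorProbes()
{
    var container = new
    {
        name = "executor",
        // startupProbe: allows up to 30 x 5 s for the strategy to load before other probes start
        startupProbe = new { httpGet = new { path = "/healthz/live", port = 8080 }, periodSeconds = 5, failureThreshold = 30 },
        // livenessProbe: the kubelet restarts the container after 3 consecutive failures
        livenessProbe = new { httpGet = new { path = "/healthz/live", port = 8080 }, periodSeconds = 10, failureThreshold = 3 },
        // readinessProbe: reports the executor as not ready, e.g. while its market data subscription is down
        readinessProbe = new { httpGet = new { path = "/healthz/ready", port = 8080 }, periodSeconds = 5 },
        // preStop hook: runs before the container is sent SIGTERM
        lifecycle = new { preStop = new { exec = new { command = new[] { "/app/flush-state" } } } },
    };
    return JsonSerializer.Serialize(container, new JsonSerializerOptions { WriteIndented = true });
}

Console.WriteLine(BuildExecutorProbes());
```

The startup probe suspends liveness and readiness checks until it succeeds, so a strategy that takes a while to load is not killed prematurely, and the preStop hook must finish before the container receives SIGTERM, which gives the executor a chance to persist its state.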
